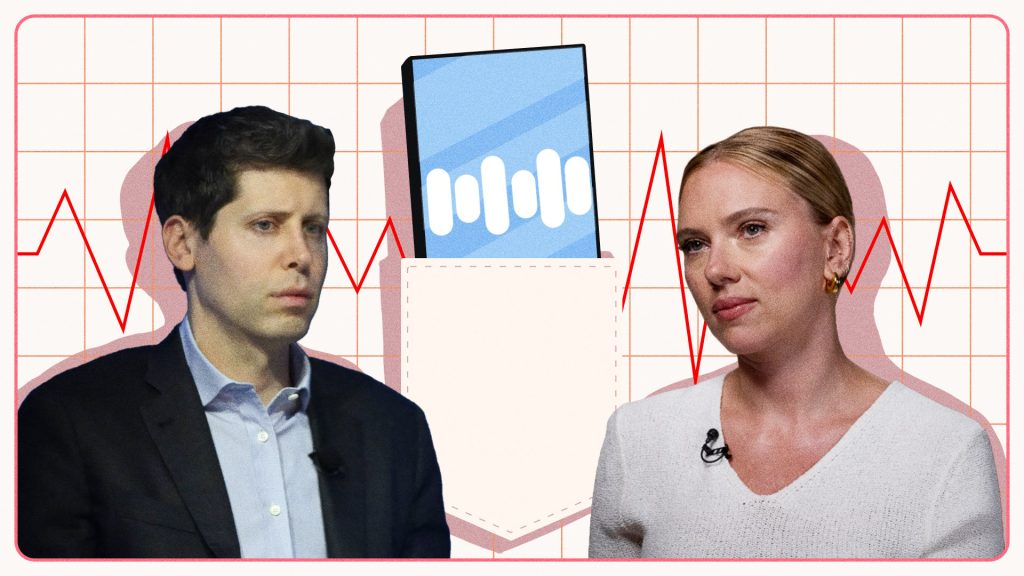

It’s rare that a single feature in a tech product can generate a week’s worth of headlines. But that’s what happened when OpenAI CEO Sam Altman unveiled Sky, one of five voices that read out ChatGPT-powered answers.

In common with many listeners, Scarlett Johansson recognized the voice as hers. Which was strange, Johansson said in an equally rare statement Monday, since Altman had contacted her multiple times asking for permission to use her voice, including once just a few days before the Sky demo. She had not given consent. Her lawyers were seeking answers from OpenAI, which had hurriedly taken Sky down a day before the statement.

As in politics, so in big tech: If you’re explaining, you’re losing. As Redditors piled on to identify the voice as Johansson’s, as a U.S. Senator indignantly subtweeted Altman, and as legislative attempts to rein in AI entered the spotlight, OpenAI spent the next week explaining as best it could. It wasn’t Johansson’s voice, the company insisted. Sky was built on the voice of an actor who just happened to sound an awful lot like Johansson.

The voice actor herself was wheeled on stage, anonymously, via the Washington Post: becoming the voice of Sky was “honestly kinda scary territory for me as a conventional voice over actor,” she said via her agent, adding this OpenAI-approved statement: “It is an inevitable step toward the wave of the future.”

An inevitable step toward the wave of the future. Boy, wouldn’t it be convenient for OpenAI if you thought so. The company’s eye-popping $90 billion valuation depends on you thinking that: you the influencer, you the investor, you the executive tasked with looking into AI-based cost savings at your company, you the politician thinking about hyping up AI threats, you the general public.

You have to believe AI is improving exponentially, you have to ignore the growing evidence that says it isn’t — or at the very least, you have to think that enough other people around you believe in AI for it not to matter what you think.

And that’s the most interesting thing about the Johansson scandal. At its root, it is not about whether the voice is Johansson’s or not. It’s not about copyright. It’s about the right of publicity, and case law in California, where both OpenAI and Johansson reside, is quite clear on this matter. In a couple of decisions involving companies that got actors to imitate Johnny Carson and Bette Midler in ads, courts held that the companies had, in effect, exploited the celebrities’ brands for profit.

In other words, it doesn’t help OpenAI even if you know Sky isn’t Scarlett Johansson. What is important is whether enough other people around you would reasonably assume it to be Scarlett Johansson for it not to matter what you think.

One collective hallucination has made a lot of very smart people ignore AI’s hallucinations. Now another, legally important collective hallucination called ScarJo could land OpenAI in a lot of hot water.

Whether or not Johansson pursues legal action, OpenAI’s brand is already showing signs of tarnish from the whole affair. As with the Cybertruck, public perception was best expressed in jokes. “7-Fingered Scarlett Johansson Appears In Video To Express Full-Fledged Approval Of OpenAI” was the perfectly pitched headline in The Onion.

Translation: we see you, OpenAI. It’s not just that we all know you would produce a full-fledged ScarJo clone given half the chance. We also see your terrible art output. It may have wowed us a year ago, but now that we know it imitates the work of a lot of other artists, it has lost its luster and seems mostly the preserve of boomers on Facebook.

Why did Altman do it, then?

As self-destructive tweets go, the one Sam Altman sent during a live ChatGPT voice demo on May 13 was at least maximally efficient. Elon Musk took at least three days to utterly tank his reputation at Twitter. Altman did it in three characters.

Simply by typing “her,” the OpenAI CEO handed a loaded gun to someone in a prime position to block his new product and tarnish his reputation. So why, then, did he specifically reference that Johansson movie, entirely built around her voice as an AI assistant, in the wake of Sky’s unveiling? Was it arrogance or impulsiveness? Was he telling on himself in more ways than one?

Altman has said repeatedly that Her (2013) is his favorite movie. He talks about it a lot. On a basic level, what Her shows is a lonely nerd, Theodore (Joaquin Phoenix), falling in love with a voice assistant, Samantha (the never-seen Johansson).

On a more meta level, Her seems to fit Altman’s needs to a T. If you wanted to hype up all the future possibilities of GPT-5 and the video AI app Sora, if you wanted to get people both horny for AI and a little scared about where it’s going, Her is the movie you’d want them to see.

If your technology has effectively stalled, or even started rolling back, if your large language model can’t stop hallucinating, if the promised economic benefits to companies keep failing to materialize … well, then layering ScarJo’s famous voice atop the tech is a very effective way to distract from the facts on the ground.

Spoiler alert: At the end of the movie, Samantha goes full AGI (exactly the outcome that OpenAI started raising in self-serving warnings a year ago). After feeling a full range of emotions and having the equivalent of phone sex with the lonely nerd, she leaves him, apologetically, for a group of other AGIs. They are doing something so hyperintelligent that puny human minds can’t comprehend it. “I need you to let me go,” Samantha urges.

Unlike Theodore, it seems, Altman didn’t listen. He may simply not understand the optics of irking Johansson, a member of the Screen Actors Guild, which recently endured a long and brutal strike to limit the ability of Hollywood studios to appropriate actors’ faces and voices via AI. He may be too deep inside the bubble to realize that AI deepfakes are already poisoning democracy (and are currently being deployed in the millions in India’s election).

Whatever his reasoning, the fact that Altman face-planted straight into an easily avoidable ScarJo scandal speaks to wider problems at OpenAI, a company that has already had more than its fair share of drama. Former employees accused Altman of “psychologically abusive behavior,” sources at the company told the Washington Post last December, around the time the board fired Altman and then rehired him, an incident that remains mysterious.

Amid the Johansson affair, it seems like the wheels are coming off the OpenAI bus. Co-founder and chief scientist Ilya Sutskever officially departed the company the day after the May 13 demo; he may well have been behind the November firing, and was also the only check left on Altman and his plans. Those plans include inking a multiyear partnership with Rupert Murdoch’s News Corp, hardly the most beloved name in media. Meanwhile, a trail of leaked documents shows OpenAI forcing employees to sign indefinite nondisclosure agreements if they want to keep their stock.

Oh yes, and the OpenAI long-term risk team was disbanded Friday. Its head wasn’t exactly holding back on Twitter, in a long thread that described how he had been “sailing against the wind” of management, a.k.a. Altman.

How this all plays out remains to be seen, but there are signs that the wheels are coming off other AI efforts too. Microsoft is so keen to provide AI features that it’s effectively turning Windows into spyware. Google’s AI search results are a laughable mess, hallucinating nonsense such as cats landing on the moon and U.S. presidents graduating from college in the 21st century. Google exec Scott Jenson quit last week because, he wrote, the company’s AI projects were “driven by this mindless panic that as long as it had AI in it, it would be great.”

Reaching for another AI movie reference, Jenson added: “The vision is that there will be a Tony Stark-like Jarvis assistant … that vision is pure catnip.”

Still, don’t be surprised if Altman gets ideas and reaches out to Jarvis/The Vision, a.k.a. Paul Bettany, for his next GPT voice, even if that garners him yet more negative publicity. Because if the Elon Musk car crash has taught us anything, it’s that today’s tech leaders have a habit of doubling down on their worst ideas.