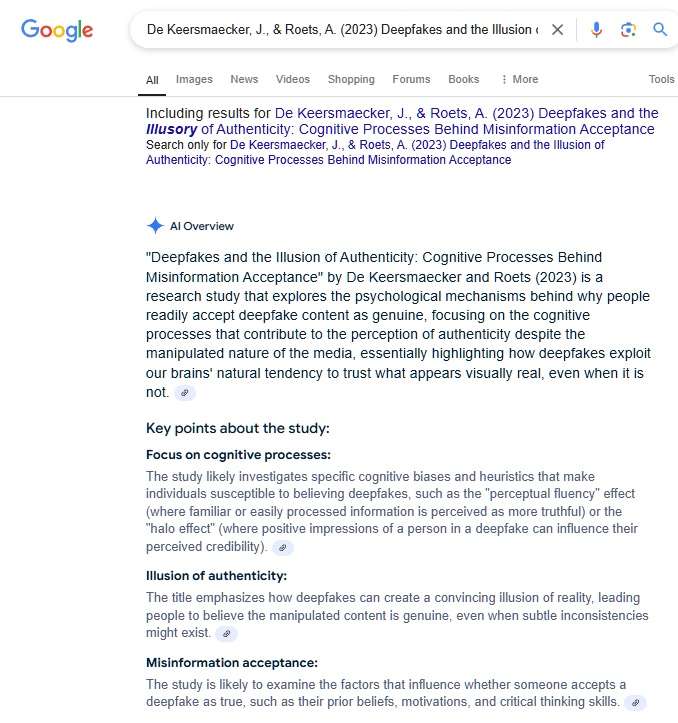

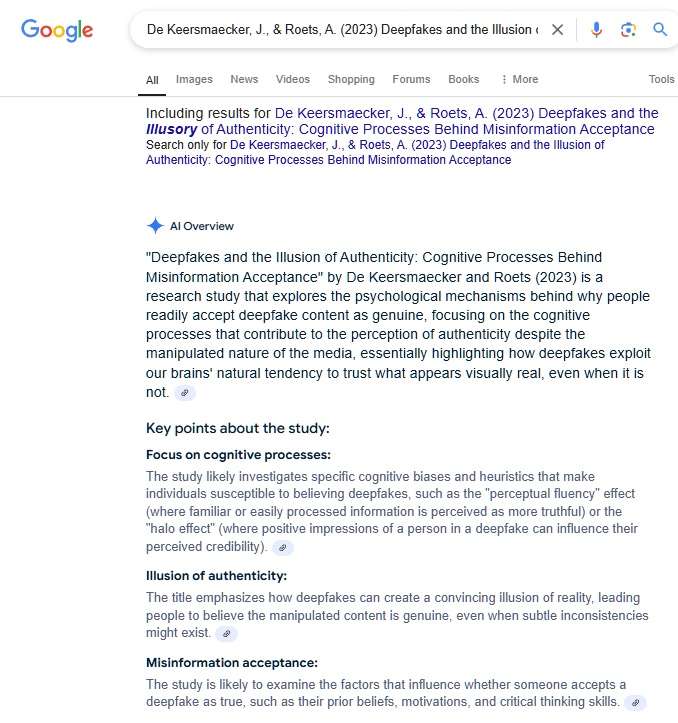

My UCLA colleague John Villasenor pointed out that Googling De Keersmaecker, J., & Roets, A. (2023) Deepfakes and the Illusion of Authenticity: Cognitive Processes Behind Misinformation Acceptance yields:

Readers of the blog may recall that De Keersmaecker, J., & Roets, A. (2023) Deepfakes and the Illusion of Authenticity: Cognitive Processes Behind Misinformation Acceptance is one of the hallucinated—i.e., nonexistent—articles cited in the AI misinformation expert’s Kohls v. Ellison declaration I blogged about Tuesday. But Google’s AI Overview seems to think there’s a there there. Indeed, perhaps that’s in part because I had included the citation in my post; human readers would realize that I gave the citation as an example of something hallucinated, but the AI software might not.

The post Androids Dreaming of Electric Sheep, or It’s Hallucinated Turtles All the Way Down appeared first on Reason.com.